Hey team, I have the integration set up that sends a daily dump of our subscription info to Cloud Storage.

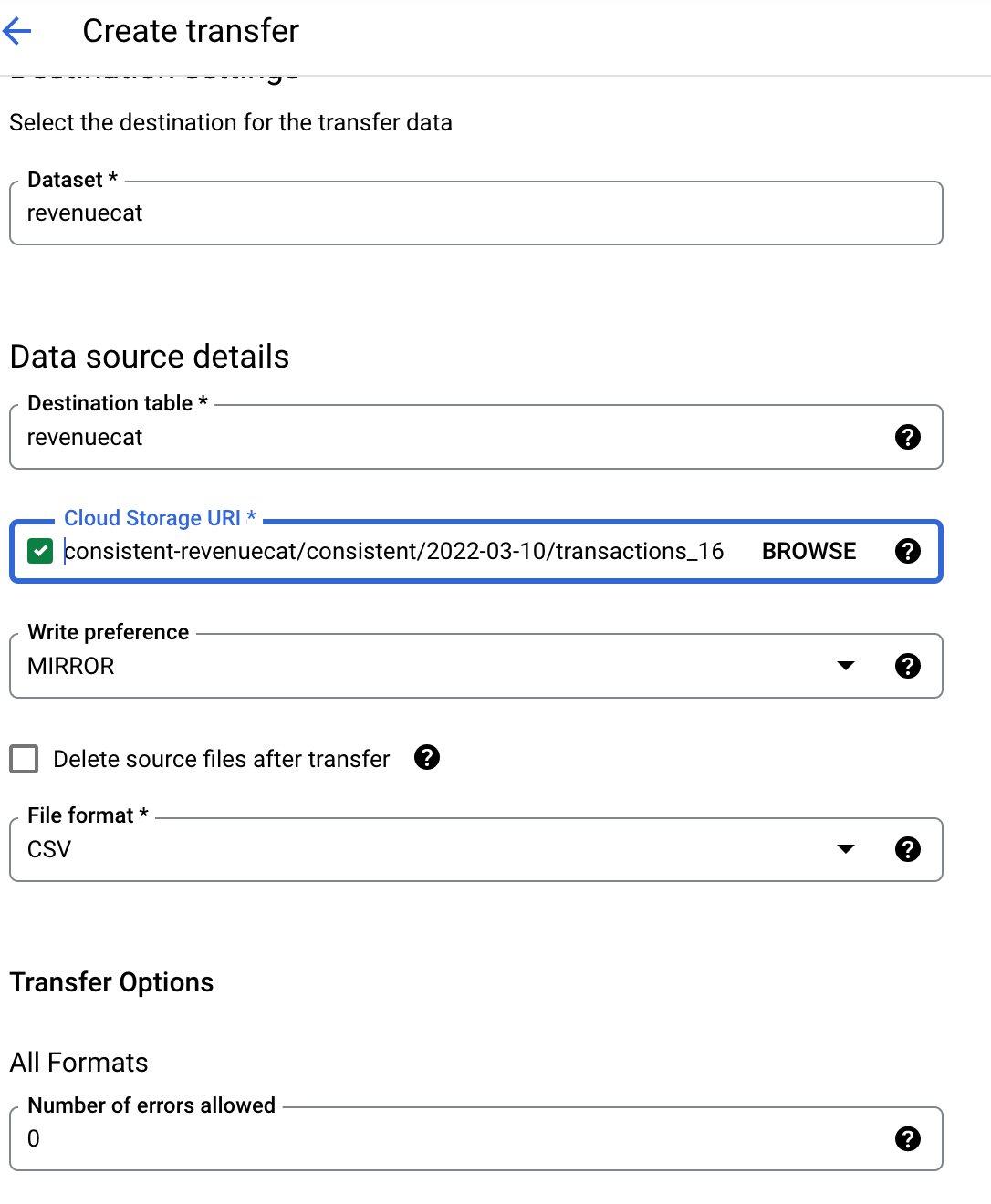

Now I’d like to create a daily job that imports this into BigQuery. I’m looking at the “Data Transfer” configuration and am a bit stuck on the “Cloud Storage URI” field:

How can I get it to _only_ pick up the CSV for a certain date string?
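
To make the goal concrete, here is a minimal Python sketch of the behavior I’m after: a URI template with a date placeholder that resolves to exactly one file per run. The bucket name, the file naming pattern, and the `resolve_uri` helper are all hypothetical, and I’m assuming the transfer config accepts a `{run_date}` runtime parameter that expands to `YYYYMMDD`, which I have not confirmed for this field.

```python
from datetime import date

# Hypothetical bucket and file naming; the real export path may differ.
URI_TEMPLATE = "gs://my-export-bucket/subscriptions_{run_date}.csv"


def resolve_uri(template: str, run_day: date) -> str:
    """Substitute {run_date} with the run day formatted as YYYYMMDD."""
    return template.replace("{run_date}", run_day.strftime("%Y%m%d"))


# Show which single file a run on 2024-01-15 should pick up.
print(resolve_uri(URI_TEMPLATE, date(2024, 1, 15)))
```

If the transfer service does the substitution itself, only the template string would go into the “Cloud Storage URI” field; the helper is just there to show the expansion I expect.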

I know this is more of a Google Cloud question, but I’d guess everyone who exports to Storage wants to do this too, so I thought I’d ask here. If you could share your data transfer configuration with me, I’d appreciate it!